by Laure Vergeron | Aug 21, 2017 | Cloud-native apps, Tutorials

We welcome the following contributed tutorial:

Rom Freiman

It is always a victory for an open source project when a contributor takes the time to provide a substantial addition to it. Today, we’re very happy to introduce a Docker Registry – Zenko tutorial by GitHub user rom-stratoscale, also known as Rom Freiman, R&D Director at Stratoscale.

Thank you very much for making the Zenko community stronger, Rom! — Laure

by Laure Vergeron | Jun 13, 2017 | Data management, Tutorials

s3fs is an open source tool that allows you to mount an S3 bucket on a filesystem-like backend. It is available both on Debian and RedHat distributions. For this tutorial, we used an Ubuntu 14.04 host to deploy and use s3fs over Scality’s S3 Server.

by Laure Vergeron | May 5, 2017 | Cloud-native apps, Tutorials

Let’s explore how to write a simple Node.js application that uses the S3 API to write data to the Scality S3 Server. If you do not have the S3 Server up and running yet, please visit the Docker Hub page to run it easily on your laptop. First we need to create a list of the libraries needed in a file called package.json. When the node package manager (npm) is run, it will download each library for the application. For this simple application, we will only need the aws-sdk library.

Save the following contents in package.json:

{

"name": "myAPP",

"version": "0.0.1",

"dependencies": {

"aws-sdk": ""

}

}

Now let’s begin coding the main application in a file called app.js with the following contents:

var aws = require('aws-sdk');

var ACCESS_KEY = process.env.ACCESS_KEY;

var SECRET_KEY = process.env.SECRET_KEY;

var ENDPOINT = process.env.ENDPOINT;

var BUCKET = process.env.BUCKET;

aws.config.update({

accessKeyId: ACCESS_KEY,

secretAccessKey: SECRET_KEY

});

var s3 = new aws.S3({

endpoint: ENDPOINT,

s3ForcePathStyle: true,

});

function upload() {

var params = {

Bucket: BUCKET,

Key: process.argv[2],

Body: process.argv[3]

};

s3.putObject(params, function(err, data) {

if (err) {

console.log('Error uploading data: ', err);

} else {

console.log("Successfully uploaded data to: " + BUCKET);

}

});

}

if (ACCESS_KEY && SECRET_KEY && ENDPOINT && BUCKET && process.argv[2] && process.argv[3]) {

console.log('Creating File: ' + process.argv[2] + ' with the following contents:\n\n' + process.argv[3] + '\n\n');

upload();

} else {

console.log('\nError: Missing S3 credentials or arguments!\n');

}

This simple application will accept two arguments on the command-line. The first argument is for the file name and the second one is for the contents of the file. Think of it as a simple note taking application.

Now that the application is written, we can install the required libraries with npm.

npm install

Before the application is started, we need to set the S3 credentials, bucket, and endpoint in environment variables.

export ACCESS_KEY='accessKey1'

export SECRET_KEY='verySecretKey1'

export BUCKET='test'

export ENDPOINT='http://127.0.0.1:8000'

Please ensure that the bucket specified in the BUCKET argument exists on the S3 Server. If it does not, please create it.
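The bucket check can also be scripted with the same aws-sdk client. The following is a minimal sketch and not part of the original application: ensureBucket is a hypothetical helper name, and it assumes the server answers headBucket with HTTP status 404 when the bucket is missing.

```javascript
// Hypothetical helper (not part of app.js): create the bucket only if it is missing.
// `s3` is an aws-sdk v2 S3 client such as the one built in app.js.
// The callback receives (err, created), where `created` is true for a new bucket.
function ensureBucket(s3, bucket, callback) {
  s3.headBucket({ Bucket: bucket }, function (headErr) {
    if (!headErr) {
      // The bucket already exists and is accessible.
      return callback(null, false);
    }
    if (headErr.statusCode !== 404) {
      // Assumption: anything other than 404 is a real error
      // (bad credentials, unreachable endpoint, and so on).
      return callback(headErr, false);
    }
    s3.createBucket({ Bucket: bucket }, function (createErr) {
      callback(createErr || null, !createErr);
    });
  });
}
```

It could be called as ensureBucket(s3, BUCKET, function (err, created) { ... }) before upload(), so that a missing bucket is created instead of causing the upload to fail.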

Now we can run the application to create a simple file called “my-message” with the contents of “Take out the trash at 1pm PST”

node app.js 'my-message' 'Take out the trash at 1pm PST'

You should now see the file on the S3 Server using your favorite S3 client.
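If you prefer to check from Node.js rather than from a separate client, the note can be read back with getObject. This is only a sketch: readNote is a hypothetical helper that is not part of app.js, and it expects the same kind of aws-sdk v2 client that app.js creates.

```javascript
// Hypothetical helper (not part of app.js): fetch a note and return its text.
// `s3` is an aws-sdk v2 S3 client configured like the one in app.js.
function readNote(s3, bucket, key, callback) {
  s3.getObject({ Bucket: bucket, Key: key }, function (err, data) {
    if (err) {
      return callback(err);
    }
    // With aws-sdk v2 on Node.js, data.Body is a Buffer.
    callback(null, data.Body.toString('utf8'));
  });
}
```

For the example above, readNote(s3, BUCKET, 'my-message', function (err, text) { console.log(err || text); }) should print the note that was just uploaded.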

I hope that this tutorial will help you get started quickly to create wonderful applications that use the S3 API to store data on the Scality S3 Server. For more code samples for different SDKs, please visit the Scality S3 Server GitHub.

by Laure Vergeron | Apr 18, 2017 | Cloud-native apps, Tutorials

Docker Swarm is a clustering tool developed by Docker and ready to use with its containers. It lets you start a service, which we define and use as a means to ensure s3server’s continuous availability to the end user. A swarm defines a manager and n workers among n+1 servers. We will do a basic setup in this tutorial, with just 3 servers, which already provides strong service resiliency while remaining easy to set up on your own. We will use NFS through Docker to share data and metadata between the different servers.

You will see that the steps of this tutorial are defined as On Server, On Clients, On All Machines. These refer respectively to the NFS Server, the NFS Clients, or the NFS Server and Clients. In our example, the IP of the Server will be 10.200.15.113, while the IPs of the Clients will be 10.200.15.96 and 10.200.15.97.

Installing docker

Any version from Docker 1.13 onwards should work; we used Docker 17.03.0-ce for this tutorial.

On All Machines

On Ubuntu 14.04

The docker website has solid documentation.

We have chosen to install the aufs dependency, as recommended by Docker. Here are the required commands:

$> sudo apt-get update

$> sudo apt-get install linux-image-extra-$(uname -r) linux-image-extra-virtual

$> sudo apt-get install apt-transport-https ca-certificates curl software-properties-common

$> curl -fsSL https://download.docker.com/linux/ubuntu/gpg | sudo apt-key add -

$> sudo add-apt-repository "deb [arch=amd64] https://download.docker.com/linux/ubuntu $(lsb_release -cs) stable"

$> sudo apt-get update

$> sudo apt-get install docker-ce

On CentOS 7

The docker website has solid documentation. Here are the required commands:

$> sudo yum install -y yum-utils

$> sudo yum-config-manager --add-repo https://download.docker.com/linux/centos/docker-ce.repo

$> sudo yum makecache fast

$> sudo yum install docker-ce

$> sudo systemctl start docker

On Clients

Your NFS Clients will mount Docker volumes over your NFS Server’s shared folders. Hence, you don’t have to mount anything manually; you just have to install the NFS commons:

On Ubuntu 14.04

Simply install the NFS commons:

$> sudo apt-get install nfs-common

On CentOS 7

Install the NFS utils, and then start the required services:

$> sudo yum install nfs-utils

$> sudo systemctl enable rpcbind

$> sudo systemctl enable nfs-server

$> sudo systemctl enable nfs-lock

$> sudo systemctl enable nfs-idmap

$> sudo systemctl start rpcbind

$> sudo systemctl start nfs-server

$> sudo systemctl start nfs-lock

$> sudo systemctl start nfs-idmap

On Server

Your NFS Server will be the machine that physically hosts the data and metadata. The packages we will install on it are slightly different from the ones we installed on the clients.

On Ubuntu 14.04

Install the NFS server specific package and the NFS commons:

$> sudo apt-get install nfs-kernel-server nfs-common

On CentOS 7

Same steps as with the clients: install the NFS utils and start the required services:

$> sudo yum install nfs-utils

$> sudo systemctl enable rpcbind

$> sudo systemctl enable nfs-server

$> sudo systemctl enable nfs-lock

$> sudo systemctl enable nfs-idmap

$> sudo systemctl start rpcbind

$> sudo systemctl start nfs-server

$> sudo systemctl start nfs-lock

$> sudo systemctl start nfs-idmap

On Ubuntu 14.04 and CentOS 7

Choose where your shared data and metadata from your local S3 Server will be stored. We chose to go with /var/nfs/data and /var/nfs/metadata. You also need to set proper sharing permissions for these folders as they’ll be shared over NFS:

$> sudo mkdir -p /var/nfs/data /var/nfs/metadata

$> sudo chmod -R 777 /var/nfs/

Now you need to update your /etc/exports file. This is the file that configures network permissions and rwx permissions for NFS access. By default, Ubuntu applies the no_subtree_check option, so we declared both folders with the same permissions, even though they’re in the same tree:

$> sudo vim /etc/exports

In this file, add the following lines:

/var/nfs/data 10.200.15.96(rw,sync,no_root_squash) 10.200.15.97(rw,sync,no_root_squash)

/var/nfs/metadata 10.200.15.96(rw,sync,no_root_squash) 10.200.15.97(rw,sync,no_root_squash)
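With more clients, these lines get long and easy to mistype. As an illustration only, here is a small Node.js sketch that builds them from a list of folders and client IPs; buildExports is a hypothetical helper, and the values are the ones used in this tutorial.

```javascript
// Build /etc/exports lines: one line per shared folder, one entry per client.
// buildExports is a hypothetical helper written for this example.
function buildExports(folders, clients, options) {
  return folders.map(function (folder) {
    var entries = clients.map(function (ip) {
      return ip + '(' + options.join(',') + ')';
    });
    return folder + ' ' + entries.join(' ');
  });
}

var lines = buildExports(
  ['/var/nfs/data', '/var/nfs/metadata'],
  ['10.200.15.96', '10.200.15.97'],
  ['rw', 'sync', 'no_root_squash']
);

// Print the lines so they can be pasted into /etc/exports.
console.log(lines.join('\n'));
```

The script only prints the lines; writing to /etc/exports is left as a manual step since it requires root access.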

Export this new NFS table:

$> sudo exportfs -a

Finally, you need to allow NFS mounts from Docker volumes on other machines. To do so, change the Docker config in /lib/systemd/system/docker.service:

$> sudo vim /lib/systemd/system/docker.service

In this file, change the MountFlags option:

MountFlags=shared

Now you just need to restart the NFS server and docker daemons so your changes apply.

On Ubuntu 14.04

Restart your NFS Server and docker services:

$> sudo service nfs-kernel-server restart

$> sudo service docker restart

On CentOS 7

Restart your NFS Server and docker daemons:

$> sudo systemctl restart nfs-server

$> sudo systemctl daemon-reload

$> sudo systemctl restart docker

Set up your Docker Swarm service

On All Machines

On Ubuntu 14.04 and CentOS 7

We will now set up the Docker volumes that will be mounted to the NFS Server and serve as data and metadata storage for S3 Server. These two commands have to be replicated on all machines:

$> docker volume create --driver local --opt type=nfs --opt o=addr=10.200.15.113,rw --opt device=:/var/nfs/data --name data

$> docker volume create --driver local --opt type=nfs --opt o=addr=10.200.15.113,rw --opt device=:/var/nfs/metadata --name metadata

There is no need to “docker exec” these volumes to mount them: the Docker Swarm manager will do it when the Docker service starts.
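Since the same two commands must be run on every machine, it can help to generate them from the server IP and export paths rather than retype them. The following Node.js sketch is only an illustration; volumeCommand is a hypothetical helper that reproduces the command shape shown above.

```javascript
// Build the `docker volume create` command for one NFS-backed volume.
// volumeCommand is a hypothetical helper; the options mirror the commands above.
function volumeCommand(serverIp, exportPath, name) {
  return [
    'docker volume create --driver local',
    '--opt type=nfs',
    '--opt o=addr=' + serverIp + ',rw',
    '--opt device=:' + exportPath,
    '--name ' + name
  ].join(' ');
}

// Print the two commands used in this tutorial.
console.log(volumeCommand('10.200.15.113', '/var/nfs/data', 'data'));
console.log(volumeCommand('10.200.15.113', '/var/nfs/metadata', 'metadata'));
```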

On Server

To start a Docker service on a Docker Swarm cluster, you first have to initialize that cluster (i.e., define a manager), then have the workers/nodes join in, and then start the service. Initialize the swarm cluster, and look at the response:

$> docker swarm init --advertise-addr 10.200.15.113

Swarm initialized: current node (db2aqfu3bzfzzs9b1kfeaglmq) is now a manager.

To add a worker to this swarm, run the following command:

docker swarm join \

--token SWMTKN-1-5yxxencrdoelr7mpltljn325uz4v6fe1gojl14lzceij3nujzu-2vfs9u6ipgcq35r90xws3stka \

10.200.15.113:2377

To add a manager to this swarm, run 'docker swarm join-token manager' and follow the instructions.

On Clients

Simply copy/paste the command provided by your docker swarm init. When all goes well, you’ll get something like this:

$> docker swarm join --token SWMTKN-1-5yxxencrdoelr7mpltljn325uz4v6fe1gojl14lzceij3nujzu-2vfs9u6ipgcq35r90xws3stka 10.200.15.113:2377

This node joined a swarm as a worker.

On Server

Start the service on your swarm cluster!

$> docker service create --name s3 --replicas 1 --mount type=volume,source=data,target=/usr/src/app/localData --mount type=volume,source=metadata,target=/usr/src/app/localMetadata -p 8000:8000 scality/s3server

If you run a docker service ls, you should have the following output:

$> docker service ls

ID NAME MODE REPLICAS IMAGE

ocmggza412ft s3 replicated 1/1 scality/s3server:latest

If your service won’t start, consider disabling apparmor/SELinux.

Testing your High Availability S3Server

On All Machines

On Ubuntu 14.04 and CentOS 7

Try to find out where your Scality S3 Server is actually running using the docker ps command. It can be on any node of the swarm cluster, manager or worker. When you find it, you can kill it with docker stop <container id>, and you’ll see it respawn on a different node of the swarm cluster. As you can see, if one of your servers fails, or if docker stops unexpectedly, your end user will still be able to access your local S3 Server.

Troubleshooting

To troubleshoot the service you can run:

$> docker service ps s3

ID NAME IMAGE NODE DESIRED STATE CURRENT STATE ERROR

0ar81cw4lvv8chafm8pw48wbc s3.1 scality/s3server localhost.localdomain.localdomain Running Running 7 days ago

cvmf3j3bz8w6r4h0lf3pxo6eu \_ s3.1 scality/s3server localhost.localdomain.localdomain Shutdown Failed 7 days ago "task: non-zero exit (137)"

If the error is truncated, you can get a more detailed view of it by inspecting the docker task ID:

$> docker inspect cvmf3j3bz8w6r4h0lf3pxo6eu

Off you go!

Let us know what you use this functionality for, and if you’d like any specific developments around it. Or, even better: come and contribute to our GitHub repository! We look forward to meeting you!

by Laure Vergeron | Mar 21, 2017 | Data management, Tutorials

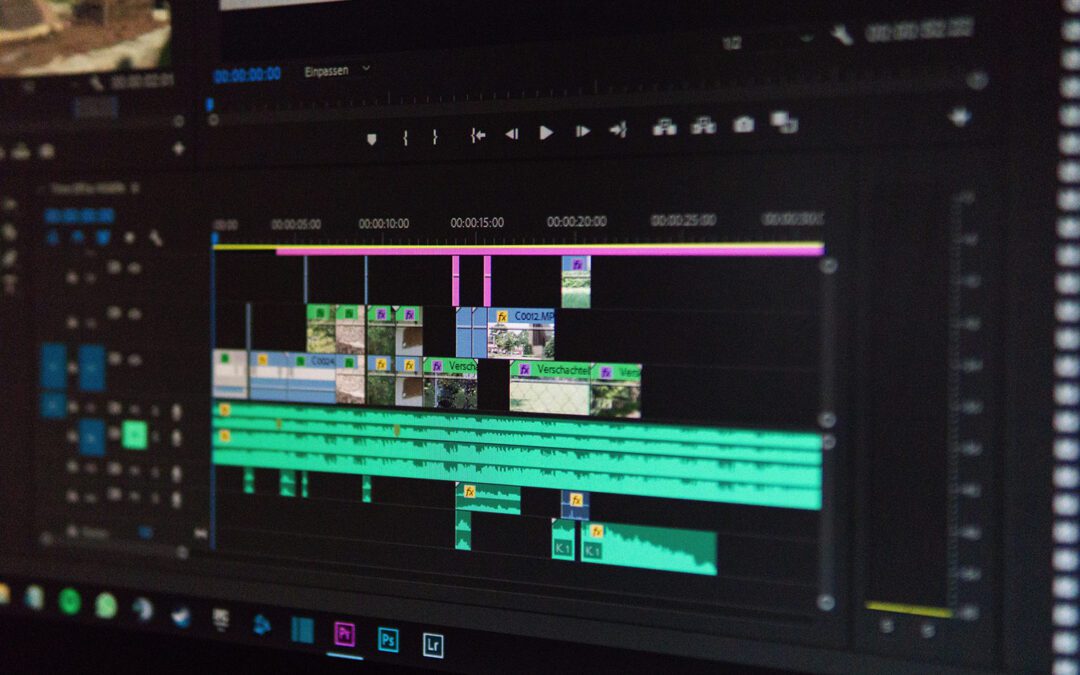

Bitmovin provides a dedicated service, enabling live and on demand encoding of videos into adaptive bitrate formats such as MPEG-DASH and HLS in the cloud. This service comes with a comprehensive API that allows seamless integration into any video workflow.

Recently Bitmovin released a managed on-premises encoding solution on top of Kubernetes and Docker that works for VoD and Live and offers the same features as their cloud encoding service. Managed on-premises encoding offers the benefits of a Software as a Service solution while utilizing your own infrastructure.

With the release of their managed on-premises encoding, they also released support for the Scality RING storage, allowing this storage solution to be used in the private cloud. In the following tutorial we will describe how to set up a Scality S3 Server [1] and use it together with the Bitmovin API to download input assets from, and upload the encoded content to.

Set up a Scality S3 Server Storage

In this tutorial we are using the official Scality S3 Server Docker image [2] that allows for a very easy and fast setup. You will need to have Docker installed in order to follow the below steps. We will be using a persistent storage, in order to keep the files we copy to the Scality S3 Server.

With the following command you can start the Scality S3 Server:

sudo docker run -d --name s3server -p 80:8000 \

-e ACCESS_KEY=accessKey1 \

-e SECRET_KEY=verySecretKey1 \

-e HOST_NAME=scality.bitmovin.com \

-v /mnt/s3data:/usr/src/app/localData \

-v /mnt/s3metadata:/usr/src/app/localMetadata \

scality/s3server

This will launch the Scality S3 Server and bind the service to port 80 on your instance. The two environment variables ACCESS_KEY and SECRET_KEY allow you to set up authentication credentials to access the Scality S3 Server.

If you plan to access the service using a DNS name (e.g., scality.bitmovin.com), you must set the environment variable HOST_NAME accordingly. The service will deny requests if the request headers do not match with this value. Alternatively, you can also use an IP without the need to set any hostname. The volume mounts are used to persistently save the data of the Scality S3 Server to directories in your filesystem.

For more information and configuration options refer to the Docker manual [3] at the Scality GitHub project.

With that we have a Scality S3 Server up and running. To test it with the Bitmovin API we need to create a bucket and upload a test asset into the bucket. To do that, we can use the generic s3cmd command line tool.

To access your Scality S3 Server with s3cmd a configuration file is required. The following will show an example of a configuration file that will allow access to the just created Scality S3 Server:

[default]

access_key = accessKey1

secret_key = verySecretKey1

host_base = scality.bitmovin.com:80

host_bucket = %(bucket)s.scality.bitmovin.com:80

signature_v2 = False

use_https = False

Save the configuration file, e.g., as scality.cfg, so you can pass it directly to your s3cmd command, or save it at ~/.s3cfg, in which case you do not need to explicitly specify a configuration file.
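If you run several Scality S3 Servers, the configuration file can be generated instead of edited by hand. The sketch below is an illustration, not part of s3cmd or of the Bitmovin tooling: s3cmdConfig is a hypothetical helper, and it uses %(bucket)s, which is s3cmd's placeholder for the bucket name in host_bucket.

```javascript
// Build the text of an s3cmd configuration file for a given endpoint.
// s3cmdConfig is a hypothetical helper written for this example.
function s3cmdConfig(host, port, accessKey, secretKey) {
  var hostPort = host + ':' + port;
  return [
    '[default]',
    'access_key = ' + accessKey,
    'secret_key = ' + secretKey,
    'host_base = ' + hostPort,
    // %(bucket)s is replaced by s3cmd with the bucket name.
    'host_bucket = %(bucket)s.' + hostPort,
    'signature_v2 = False',
    'use_https = False'
  ].join('\n') + '\n';
}

// Print the configuration for the server used in this tutorial.
console.log(s3cmdConfig('scality.bitmovin.com', 80, 'accessKey1', 'verySecretKey1'));
```

The output can be redirected to scality.cfg, for example with node make-config.js > scality.cfg, where make-config.js is whatever name you give the script.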

Create a bucket with s3cmd:

s3cmd -c scality.cfg mb s3://testbucket

Verify the bucket got created:

s3cmd -c scality.cfg ls

Upload a test asset to the bucket:

s3cmd -c scality.cfg put samplevideo.mp4 s3://testbucket/inputs/

Check if the test asset got correctly uploaded:

s3cmd -c scality.cfg ls s3://testbucket/inputs/

Using Scality with the Bitmovin API

Bitmovin added support for the Scality S3 Server with a generic S3 interface. In the following we will discuss an easy example on how to use a Scality S3 Server for retrieving an input asset, as well as for storing the encoded output back to the Scality S3 server. For the sake of simplicity we will be using the same Scality S3 Server for input and output that we have just created above.

Obviously, you could also use different Scality S3 Servers for input and output.

For this tutorial we will be using Bitmovin’s PHP API client, which already has a neat example [4] of how to use a Scality S3 Server for retrieving an input asset and uploading the encoded data back to the Scality S3 Server.

To get the Bitmovin PHP API Client you can either download it from GitHub [5] or install it using composer. Please see the API clients repository for more information about the setup.

First of all we need to specify all data that is required to run the example. In the following we will be using the data from the above Scality S3 Server that we have just created referencing the uploaded input file samplevideo.mp4. We are also specifying an output folder where the encoded files should be placed:

$scalityHost = 'scality.bitmovin.com';

$scalityPort = 80;

$scalityAccessKey = 'accessKey1';

$scalitySecretKey = 'verySecretKey1';

$scalityBucketName = 'testbucket';

$scalityInputPath = "inputs/samplevideo.mp4";

$scalityOutputPrefix = "output/samplevideo/";

Initialize the Bitmovin API Client

$client = new BitmovinClient('INSERT YOUR API KEY HERE');

For initializing the BitmovinClient you need to have an account with Bitmovin and the API key of your account available.

Create an input configuration

We will create an input referencing the samplevideo.mp4 from our Scality S3 Server.

$input = new GenericS3Input($scalityBucketName, $scalityAccessKey, $scalitySecretKey, $scalityHost, $scalityPort, $scalityInputPath);

Create an output configuration

We will create an output configuration that will allow us to store the encoded files to our Scality S3 Server in the output/samplevideo folder.

$output = new GenericS3Output($scalityAccessKey, $scalitySecretKey, $scalityHost, $scalityPort, $scalityBucketName, $scalityOutputPrefix);

Create an encoding profile configuration

An encoding profile configuration contains all the encoding related configurations for video/audio renditions, as well as the encoding environment itself. Choose the region and cloud provider where the encoding should take place. Of course it is optimal if it is close to where your Scality S3 Server is located to reduce the download and upload times 😉 If you are using Bitmovin’s on-premises feature, you can simply choose your connected Kubernetes cluster instead of a cloud region and the encoding will be scheduled on your own hardware.

$encodingProfile = new EncodingProfileConfig();

$encodingProfile->name = 'Scality Example';

$encodingProfile->cloudRegion = CloudRegion::GOOGLE_EUROPE_WEST_1;

Add video stream configurations to the encoding profile

In the following you will see a configuration for a 1080p H.264 video representation. You will want to add more video representations for your ABR streams as also shown in the example in our GitHub repository.

$videoStreamConfig_1080 = new H264VideoStreamConfig();

$videoStreamConfig_1080->input = $input;

$videoStreamConfig_1080->width = 1920;

$videoStreamConfig_1080->height = 1080;

$videoStreamConfig_1080->bitrate = 4800000;

$encodingProfile->videoStreamConfigs[] = $videoStreamConfig_1080;

Add an audio stream configuration to the encoding profile

$audioConfig = new AudioStreamConfig();

$audioConfig->input = $input;

$audioConfig->bitrate = 128000;

$audioConfig->name = 'English';

$audioConfig->lang = 'en';

$audioConfig->position = 1;

$encodingProfile->audioStreamConfigs[] = $audioConfig;

Create encoding job and start it

The JobConfig acts as a container for all the previously created configurations. Here we also define that we want to have MPEG-DASH and HLS output created. The JobConfig object will be passed to the BitmovinClient, which will then start the encoding job and wait until it is finished.

$jobConfig = new JobConfig();

$jobConfig->output = $output;

$jobConfig->encodingProfile = $encodingProfile;

$jobConfig->outputFormat[] = new DashOutputFormat();

$jobConfig->outputFormat[] = new HlsOutputFormat();

$client->runJobAndWaitForCompletion($jobConfig);

After the encoding job has finished you should have all encoded files for MPEG-DASH and HLS with the manifests on your Scality S3 Server. When using Scality you can simply access the files with HTTP. For the above example the HTTP links would be as follows:

MPEG-DASH: http://scality.bitmovin.com/output/samplevideo/stream.mpd

HLS: http://scality.bitmovin.com/output/samplevideo/stream.m3u8

[1] The Scality S3 Server is an open-source object storage project to enable on-premise S3-based application development and data deployment choice

[2] https://hub.docker.com/r/scality/s3server/

[3] https://github.com/scality/S3/blob/master/DOCKER.md

[4] https://github.com/bitmovin/bitmovin-php/blob/master/examples/CreateSimpleEncodingWithScalityInputAndOutput.php

[5] https://github.com/bitmovin/bitmovin-php

Thanks to the Bitmovin R&D Team for their contribution!

by Laure Vergeron | Mar 15, 2017 | Data management, Tutorials

Installing

Deploying CloudServer

First, you need to deploy CloudServer (formerly called S3 Server). This can be done very easily via our DockerHub page (you want to run it with a file backend).

Note:

– If you don’t have docker installed on your machine, here are the instructions to install it for your distribution

Installing Duplicity and its dependencies

Second, you want to install Duplicity. You have to download this tarball, decompress it, and then check out the README inside, which will give you a list of dependencies to install. If you’re using Ubuntu 14.04, this is your lucky day: here is a lazy step-by-step install.

$> apt-get install librsync-dev gnupg

$> apt-get install python-dev python-pip python-lockfile

$> pip install -U boto

Then you want to actually install Duplicity:

$> tar zxvf duplicity-0.7.11.tar.gz

$> cd duplicity-0.7.11

$> python setup.py install

Using

Testing your installation

First, we’re just going to quickly check that S3 Server is actually running. To do so, simply run $> docker ps . You should see one container named zenko/cloudserver. If that is not the case, try $> docker start cloudserver, and check again.

Secondly, as you probably know, Duplicity uses a module called Boto to send requests to S3. Boto requires a configuration file located at /etc/boto.cfg to hold your credentials and preferences. Here is a minimalistic config that you can fine-tune following these instructions.

[Credentials]

aws_access_key_id = accessKey1

aws_secret_access_key = verySecretKey1

[Boto]

# If using SSL, set to True

is_secure = False

# If using SSL, uncomment and provide absolute path to local CA certificate

# ca_certificates_file = /absolute/path/to/ca.crt

Note:

If you want to set up SSL with S3 Server, check out our tutorial

At this point, we’ve met all the requirements to start running S3 Server as a backend to Duplicity. So we should be able to back up a local folder/file to local S3. Let’s try with the duplicity decompressed folder:

$> duplicity duplicity-0.7.11 "s3://127.0.0.1:8000/testbucket/"

Note:

Duplicity will prompt you for a symmetric encryption passphrase. Save it somewhere as you will need it to recover your data. Alternatively, you can also add the --no-encryption flag and the data will be stored plain.

If this command is successful, you will get an output looking like this:

--------------[ Backup Statistics ]--------------

StartTime 1486486547.13 (Tue Feb 7 16:55:47 2017)

EndTime 1486486547.40 (Tue Feb 7 16:55:47 2017)

ElapsedTime 0.27 (0.27 seconds)

SourceFiles 388

SourceFileSize 6634529 (6.33 MB)

NewFiles 388

NewFileSize 6634529 (6.33 MB)

DeletedFiles 0

ChangedFiles 0

ChangedFileSize 0 (0 bytes)

ChangedDeltaSize 0 (0 bytes)

DeltaEntries 388

RawDeltaSize 6392865 (6.10 MB)

TotalDestinationSizeChange 2003677 (1.91 MB)

Errors 0

-------------------------------------------------

Congratulations! You can now back up to your local S3 through duplicity 🙂

Automating backups

Now you probably want to back up your files periodically. The easiest way to do this is to write a bash script and add it to your crontab. Here is my suggestion for such a file:

#!/bin/bash

# Export your passphrase so you don't have to type anything

export PASSPHRASE="mypassphrase"

# If you want to use a GPG Key, put it here and uncomment the line below

#GPG_KEY=

# Define your backup bucket, with localhost specified

DEST="s3://127.0.0.1:8000/testbuckets3server/"

# Define the absolute path to the folder you want to backup

SOURCE=/root/testfolder

# Set to "full" for full backups, and "incremental" for incremental backups

# Warning: you have to perform one full backup before you can perform

# incremental ones on top of it

FULL=incremental

# How long to keep backups for; if you don't want to delete old backups, keep

# empty; otherwise, syntax is "1Y" for one year, "1M" for one month, "1D" for

# one day

OLDER_THAN="1Y"

# is_running checks whether duplicity is currently completing a task

is_running=$(ps -ef | grep duplicity | grep python | wc -l)

# If duplicity is already completing a task, this will simply not run

if [ $is_running -eq 0 ]; then

echo "Backup for ${SOURCE} started"

# If you want to delete backups older than a certain time, we do it here

if [ "$OLDER_THAN" != "" ]; then

echo "Removing backups older than ${OLDER_THAN}"

duplicity remove-older-than ${OLDER_THAN} --force ${DEST}

fi

# This is where the actual backup takes place

echo "Backing up ${SOURCE}..."

duplicity ${FULL} \

${SOURCE} ${DEST}

# If you're using GPG, paste this in the command above

# --encrypt-key=${GPG_KEY} --sign-key=${GPG_KEY} \

# If you want to exclude a subfolder/file, put it below and paste this

# in the command above

# --exclude=${SOURCE}/path_to_exclude \

echo "Backup for ${SOURCE} complete"

echo "------------------------------------"

fi

# Forget the passphrase...

unset PASSPHRASE

So let’s say you put this file in /usr/local/sbin/backup.sh. Next you want to run crontab -e and paste your configuration in the file that opens. If you’re unfamiliar with Cron, here is a good How To.

The folder I’m backing up is one I modify constantly during my workday, so I want incremental backups every 5 minutes from 8 AM to 9 PM, Monday to Friday. Here is the line I will paste in my crontab:

*/5 8-20 * * 1-5 /usr/local/sbin/backup.sh

Now I can try and add / remove files from the folder I’m backing up, and I will see incremental backups in my bucket.
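To double-check which times the crontab entry above actually covers, here is a small Node.js sketch that mirrors its three constraints. matchesBackupSchedule is a hypothetical helper written for this example; it only handles this specific entry and is not a general cron parser.

```javascript
// Returns true when `date` (local time) matches "*/5 8-20 * * 1-5".
// matchesBackupSchedule is a hypothetical helper for this one entry only.
function matchesBackupSchedule(date) {
  var minuteOk = date.getMinutes() % 5 === 0;   // */5: every fifth minute
  var hour = date.getHours();
  var hourOk = hour >= 8 && hour <= 20;         // 8-20: hours 8 through 20 inclusive
  var day = date.getDay();                      // 0 = Sunday, 6 = Saturday
  var weekdayOk = day >= 1 && day <= 5;         // 1-5: Monday to Friday
  return minuteOk && hourOk && weekdayOk;
}

// Wednesday, March 15, 2017 at 20:55 local time is the last run of that day.
console.log(matchesBackupSchedule(new Date(2017, 2, 15, 20, 55)));
// 21:00 is outside the 8-20 hour range, so no backup starts then.
console.log(matchesBackupSchedule(new Date(2017, 2, 15, 21, 0)));
```

Because the hour field is 8-20, the last backup of the day starts at 8:55 PM and nothing runs at 9 PM sharp.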